A framework based on advanced AI techniques can solve complex, computationally intensive problems faster and in a more scalable way than state-of-the-art methods, according to a study led by engineers at the University of California San Diego.

In the paper, which was published in Nature Machine Intelligence, researchers present HypOp, a framework that uses unsupervised learning and hypergraph neural networks. The framework is able to solve combinatorial optimization problems significantly faster than existing methods. HypOp is also able to solve certain combinatorial problems that can’t be solved as effectively by prior methods.

“In this paper, we tackle the difficult task of addressing combinatorial optimization problems that are paramount in many fields of science and engineering,” said Nasimeh Heydaribeni, the paper’s corresponding author and a postdoctoral scholar in the UC San Diego Department of Electrical and Computer Engineering. She is part of the research group of Professor Farinaz Koushanfar, who co-directs the Center for Machine-Intelligence, Computing and Security at the UC San Diego Jacobs School of Engineering. Professor Tina Eliassi-Rad from Northeastern University also collaborated with the UC San Diego team on this project.

One example of a relatively simple combinatorial problem is figuring out how many and what kind of goods to stock at specific warehouses in order to consume the least amount of gas when delivering these goods.

HypOp can be applied to a broad spectrum of challenging real-world problems, with applications in drug discovery, chip design, logic verification, logistics and more. These are all combinatorial problems with a wide range of variables and constraints that make them extremely difficult to solve. That is because in these problems, the size of the underlying search space for finding potential solutions increases exponentially rather than in a linear fashion with respect to the problem size.
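To make the exponential growth concrete, here is a minimal sketch. It assumes a problem made up of independent yes/no decisions, a simplification chosen for illustration rather than something taken from the paper. The function name `search_space_size` is hypothetical.

```python
def search_space_size(num_binary_vars: int) -> int:
    """Number of distinct ways to assign num_binary_vars yes/no decisions."""
    return 2 ** num_binary_vars


# Doubling the problem size squares the number of candidates
# instead of merely doubling it.
for n in (10, 20, 40, 80):
    print(f"{n:>3} decisions -> {search_space_size(n):,} candidate solutions")
```

Under this assumption, adding a single extra decision doubles the number of candidate solutions, which is why exhaustive search quickly stops being practical.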

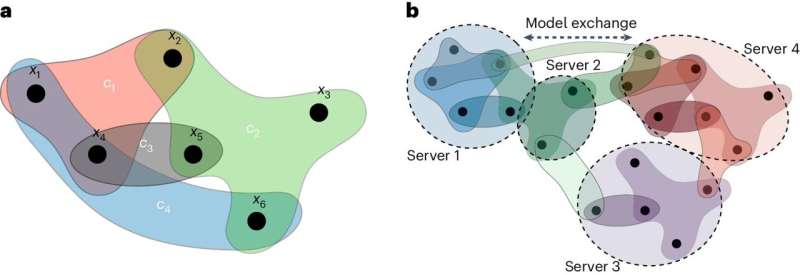

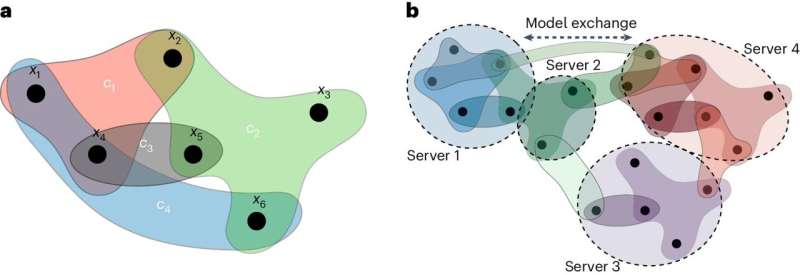

HypOp can solve these complex problems in a more scalable manner by using a new distributed algorithm that allows multiple computation units on the hypergraph to solve the problem together, in parallel, more efficiently.

HypOp introduces a new problem embedding that leverages hypergraph neural networks, which have higher-order connections than traditional graph neural networks, to better model the problem constraints and solve them more proficiently. HypOp can also transfer learning from one problem to help solve other, seemingly different problems more effectively. HypOp includes an additional fine-tuning step, which leads to more accurate solutions than prior methods.

The code for HypOp is available here.

Below, the UC San Diego research team on this paper breaks down the findings for a broader audience through a short Q&A.

You note in the press release that HypOp also transfer-learns from one type of problem objective to help solve other cost functions more effectively. For a non-technical expert, is there more to say about this phenomenon that is relevant to the larger conversation about how AI is empowering researchers to solve problems and make discoveries that would otherwise be impossible?

HypOp’s ability to transfer-learn from one problem to assist in solving others is a prime example of how AI can introduce a paradigm shift in research and discovery. This capability, known as transfer learning, allows AI systems to apply knowledge gained from solving one problem to new but related problems with a different cost function, making them more versatile and efficient.

For non-technical experts, consider how human expertise works. For instance, learning piano creates a comprehensive musical foundation that makes learning guitar faster and more effective. The transferable skills include music theory knowledge, reading proficiency, rhythmic understanding, finger dexterity, and aural abilities. These skills collectively enhance the learning experience and lead to quicker and better mastery of the guitar for someone who already knows how to play the piano. In comparison, a novice music student would have a much longer learning curve.

This synergy between human intelligence and AI amplifies researchers’ ability to address complex, interdisciplinary challenges and drive progress in ways that were previously unimaginable. That is one reason why we are very excited about HypOp’s advancements and contributions.

There is a lot of conversation in many different circles about using machine learning and artificial intelligence to help researchers make discoveries faster, or even to make discoveries that would otherwise be impossible. For people who may not understand all the technical details of your new paper, how influential do you believe this new approach, HypOp, will be in terms of how AI is used in problem solving and research?

The overarching concept is that learning the pertinent problem structure can greatly enhance the quality and speed of solving combinatorial optimization problems. HypOp’s particular methodology holds significant potential for influencing the way AI is applied in problem solving and research. By leveraging hypergraph neural networks (HyperGNNs), HypOp extends the capabilities of traditional graph neural networks to scalably tackle higher-order constrained combinatorial optimization problems. This advancement is crucial because many real-world problems involve complex constraints and interactions that go beyond the simple pairwise relationships modeled by earlier approaches.
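As a rough illustration of what "beyond pairwise" means, the sketch below represents a tiny hypergraph in which one hyperedge can join three nodes at once, something a single edge in an ordinary graph cannot do. The instance, the function names, and the choice of a "cut" objective are all assumptions made for illustration. The exhaustive search is a stand-in that only works at toy sizes, and it is not the HypOp algorithm.

```python
from itertools import product

# Hypothetical toy instance: 4 nodes; a hyperedge may join any number of nodes.
hyperedges = [(0, 1, 2), (1, 3), (0, 2, 3)]
num_nodes = 4


def incidence_matrix(num_nodes, hyperedges):
    """Rows are nodes, columns are hyperedges; 1 marks membership."""
    return [[1 if v in e else 0 for e in hyperedges] for v in range(num_nodes)]


def cut_hyperedges(assignment, hyperedges):
    """Count hyperedges whose nodes do not all share the same 0/1 label."""
    return sum(1 for e in hyperedges if len({assignment[v] for v in e}) > 1)


def brute_force_max_cut(num_nodes, hyperedges):
    """Try all 2**num_nodes labelings; feasible only for tiny instances."""
    return max(product((0, 1), repeat=num_nodes),
               key=lambda a: cut_hyperedges(a, hyperedges))


best = brute_force_max_cut(num_nodes, hyperedges)
print(incidence_matrix(num_nodes, hyperedges))
print(best, cut_hyperedges(best, hyperedges))
```

The first and third hyperedges each constrain three nodes jointly. A pairwise graph would have to approximate each of them with several separate edges, losing the fact that the constraint applies to the group as a whole.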

The code for HypOp is available online. Do you expect people will start using the code right away to solve combinatorial optimization problems? Or is there more work to be done before people can start using the code?

Yes, people can start using the HypOp open-source code right away to solve large-scale combinatorial optimization problems.

What problems is HypOp able to solve that other methods can’t tackle?

HypOp can solve large-scale optimization problems with generic objective functions and constraints. Most existing solvers can only solve problems with specific objective functions, such as linear or quadratic functions, and can only model pairwise constraints. Moreover, HypOp leverages distributed training techniques, which enable it to scale to substantial problem instances.

What are the next steps in terms of research for HypOp?

We are focused on extending the generalizability and scalability of HypOp. We are doing so by designing other advanced AI techniques that are capable of learning from addressing smaller problem instances and generalizing to larger problem cases.

More information:

Nasimeh Heydaribeni et al, Distributed constrained combinatorial optimization leveraging hypergraph neural networks, Nature Machine Intelligence (2024). DOI: 10.1038/s42256-024-00833-7

University of California – San Diego

Advanced AI-based techniques scale-up solving complex combinatorial optimization problems (2024, June 10)

retrieved 10 June 2024

from https://techxplore.com/news/2024-06-advanced-ai-based-techniques-scale.html

