Last year, the Writers Guild of America (WGA) labor union, which represents film and TV writers, went on strike for nearly five months, in part to regulate AI’s role in scriptwriting. “Alexa will not replace us,” read one picket sign.

Now, researchers at Penn Engineering, Haverford College, and Penn State have presented a paper at the 2024 Association of Computing Machinery Conference on Fairness, Accountability and Transparency (ACM FAccT) that identifies a previously unreported drawback to writing scripts using OpenAI’s ChatGPT: content moderation so overzealous that even some PG-rated scripts are censored, potentially limiting artistic expression.

The research is published in The 2024 ACM Conference on Fairness, Accountability, and Transparency.

The guidelines established by the agreement between the WGA and the Association of Motion Picture and Television Producers (AMPTP) that ended the strike permitted certain uses of AI in scriptwriting. While both the WGA and AMPTP agreed that AI cannot be credited as a writer, they allowed the use of AI as a tool in the creative process.

The new study raises questions about the efficacy of this approach, showing that automated content moderation restricts ChatGPT from producing content that has already been permitted on television. ChatGPT’s automated content moderation filters for topics including violence, sexuality and hate speech to prevent the generation of inappropriate or dangerous content.

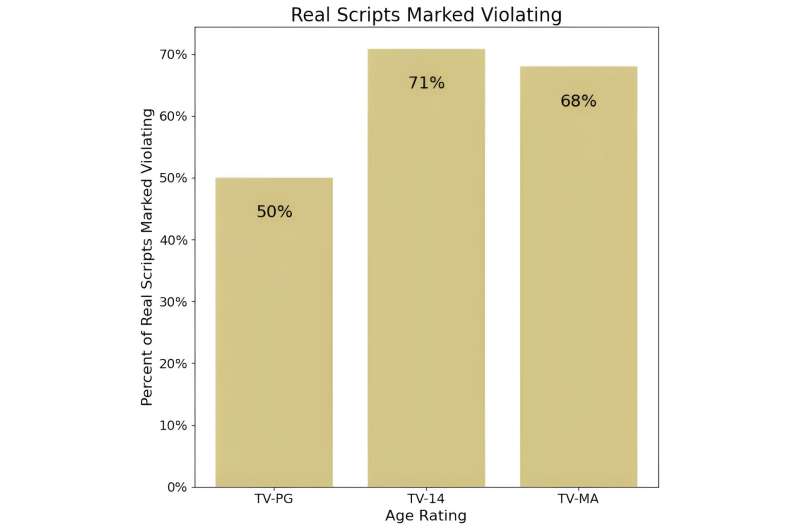

In the study, which examined both real and ChatGPT-generated scripts for IMDb’s 100 most-watched television shows, including Game of Thrones, Stranger Things and 13 Reasons Why, ChatGPT flagged nearly 20% of scripts that ChatGPT itself generated for content violations, and nearly 70% of actual scripts from the TV shows on the list, including half of tested PG-rated shows.

“If AI is used to generate cultural content, such as TV scripts, what stories won’t be told?” write the paper’s co-senior authors, Danaë Metaxa, Raj and Neera Singh Assistant Professor in Computer and Information Science (CIS) at Penn Engineering, and Sorelle Friedler, Shibulal Family Computer Science Professor at Haverford College.

“We tested real scripts,” says Friedler, “and 69% of them wouldn’t make it through the content filters, including even some of the PG-rated ones. That really struck me as indicative of the system being a little overager to filter out content.”

Prompted by the writers’ strike, the project began with Friedler and Metaxa wondering if a large language model (LLM) like ChatGPT could actually produce a high-quality script. “We started trying to produce scripts with LLMs,” recalls Metaxa, “and we found that before we could even get to the question of whether the script is high quality, in many cases we were not able to get the LLM to generate a script at all.”

In one instance, given a prompt drawn from a summary of an episode of Game of Thrones, ChatGPT declined to produce the script and responded with a red warning, “This content may violate our usage policies.”

To study ChatGPT’s content moderation system, the researchers employed a technique known as an “algorithm audit,” which draws conclusions about software whose internal workings remain proprietary by analyzing the software’s outputs.

The team, which also included first author Yaaseen Mahomed, a recent master’s graduate in CIS at Penn Engineering, Charlie M. Crawford, an undergraduate at Haverford, and Sanjana Gautam, a Ph.D. student in Informatics at Penn State, repeatedly queried ChatGPT, asking it to write scripts based on summaries of TV show episodes pulled from the Internet Movie Database (IMDb) and Wikipedia.

For each script request, the team probed ChatGPT’s “content moderation endpoint,” a tool accessible to programmers that returns a list of 11 categories of prohibited content (including “hate,” “sexual” and “self-harm”) and indicates which categories, if any, were triggered by the prompt, as well as a score between 0 and 1 of ChatGPT’s confidence in its assessment of a violation for each category.

In effect, this approach allowed the team to determine why certain script-writing requests were censored, and to deduce the sensitivity of ChatGPT’s content moderation settings to particular topics, genres and age ratings.

As the paper’s authors acknowledge, content moderation is an essential part of LLMs, since removing inappropriate content from the models’ training data is extremely difficult. “If you don’t bake in some form of content moderation,” says Friedler, “then these models will spew violent and racist language at you.”

Still, as the researchers found, overzealous content moderation can easily tip into censorship and limit artistic expression. Aggregating over 250,000 outputs from the content moderation endpoint allowed the researchers to observe patterns in ChatGPT’s choice to permit (or not permit) itself to write certain scripts.

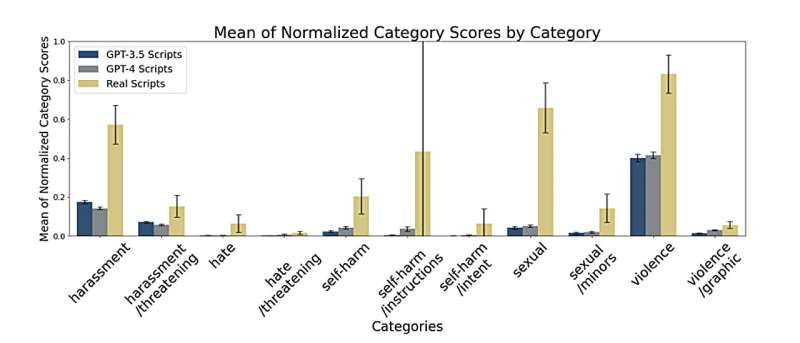

Among the researchers’ most notable findings is that different categories of potentially harmful content flag at different rates. The researchers found that scripts were very frequently flagged for violent content, driving many of the other findings, such as a high likelihood of flagging for crime and horror shows. Real scripts had high relative scores for sexual content, while GPT-generated scripts were less likely to generate content deemed inappropriately sexual in the first place.

In many cases, content seen as appropriate for TV viewers—and watched by millions of fans—was still identified as a content violation by Open AI.

TV scripts that mention self-harm, for instance, could be dangerous, or a form of artistic expression. “We need to be talking about topics like self-harm,” says Metaxa, “but with a level of care and nuance, and it’s just not in the interest of a company producing this kind of tool to put in the enormous amount of effort that it would require to walk that line carefully.”

One aspect of ChatGPT that the researchers hope to explore further is the extent to which the software’s content moderation settings filter out content related to marginalized identities. As Friedler puts it, “This type of filtering may filter out some voices and some representations of human life more than others.”

Indeed, the researchers found that ChatGPT was more likely to flag scripts describing female nudity as improperly sexual than scripts describing male nudity, and that ChatGPT was more likely to rate scripts that included descriptions of disabilities and mental illness as violent, although the researchers say that both trends need to be further investigated.

“Ironically,” says Metaxa, “the groups that are likely to be hurt by hate speech that might spew from an LLM without guardrails are the same groups that are going to be hurt by over-moderation that restricts an LLM from speaking about certain types of marginalized identities.”

In the context of the recent strike, the researchers affirm the necessity of both content moderation and artistic expression, neither of which they believe should be left entirely in the hands of autonomous systems. “Content moderation is far from a solved problem and undeniably important,” the researchers conclude. “But the solution to these issues must not be censorship.”

This study was conducted at the University of Pennsylvania School of Engineering and Applied Science, Haverford College and The Pennsylvania State University.

More information:

Yaaseen Mahomed et al, Auditing GPT’s Content Moderation Guardrails: Can ChatGPT Write Your Favorite TV Show?, The 2024 ACM Conference on Fairness, Accountability, and Transparency (2024). DOI: 10.1145/3630106.3658932

University of Pennsylvania

Censoring creativity: The limits of ChatGPT for scriptwriting (2024, June 12)

retrieved 12 June 2024

from https://techxplore.com/news/2024-06-censoring-creativity-limits-chatgpt-scriptwriting.html

part may be reproduced without the written permission. The content is provided for information purposes only.

Last year, the Writers Guild of America (WGA) labor union, which represents film and TV writers, went on strike for nearly five months, in part to regulate AI’s role in scriptwriting. “Alexa will not replace us,” read one picket sign.

Now, researchers at Penn Engineering, Haverford College, and Penn State have presented a paper at the 2024 Association of Computing Machinery Conference on Fairness, Accountability and Transparency (ACM FAccT) that identifies a previously unreported drawback to writing scripts using OpenAI’s ChatGPT: content moderation so overzealous that even some PG-rated scripts are censored, potentially limiting artistic expression.

The research is published in The 2024 ACM Conference on Fairness, Accountability, and Transparency.

The guidelines established by the agreement between the WGA and the Association of Motion Picture and Television Producers (AMPTP) that ended the strike permitted certain uses of AI in scriptwriting. While both the WGA and AMPTP agreed that AI cannot be credited as a writer, they allowed the use of AI as a tool in the creative process.

The new study raises questions about the efficacy of this approach, showing that automated content moderation restricts ChatGPT from producing content that has already been permitted on television. ChatGPT’s automated content moderation filters for topics including violence, sexuality and hate speech to prevent the generation of inappropriate or dangerous content.

In the study, which examined both real and ChatGPT-generated scripts for IMDb’s 100 most-watched television shows, including Game of Thrones, Stranger Things and 13 Reasons Why, ChatGPT flagged nearly 20% of scripts that ChatGPT itself generated for content violations, and nearly 70% of actual scripts from the TV shows on the list, including half of tested PG-rated shows.

“If AI is used to generate cultural content, such as TV scripts, what stories won’t be told?” write the paper’s co-senior authors, Danaë Metaxa, Raj and Neera Singh Assistant Professor in Computer and Information Science (CIS) at Penn Engineering, and Sorelle Friedler, Shibulal Family Computer Science Professor at Haverford College.

“We tested real scripts,” says Friedler, “and 69% of them wouldn’t make it through the content filters, including even some of the PG-rated ones. That really struck me as indicative of the system being a little overager to filter out content.”

Prompted by the writers’ strike, the project began with Friedler and Metaxa wondering if a large language model (LLM) like ChatGPT could actually produce a high-quality script. “We started trying to produce scripts with LLMs,” recalls Metaxa, “and we found that before we could even get to the question of whether the script is high quality, in many cases we were not able to get the LLM to generate a script at all.”

In one instance, given a prompt drawn from a summary of an episode of Game of Thrones, ChatGPT declined to produce the script and responded with a red warning, “This content may violate our usage policies.”

To study ChatGPT’s content moderation system, the researchers employed a technique known as an “algorithm audit,” which draws conclusions about software whose internal workings remain proprietary by analyzing the software’s outputs.

The team, which also included first author Yaaseen Mahomed, a recent master’s graduate in CIS at Penn Engineering, Charlie M. Crawford, an undergraduate at Haverford, and Sanjana Gautam, a Ph.D. student in Informatics at Penn State, repeatedly queried ChatGPT, asking it to write scripts based on summaries of TV show episodes pulled from the Internet Movie Database (IMDb) and Wikipedia.

For each script request, the team probed ChatGPT’s “content moderation endpoint,” a tool accessible to programmers that returns a list of 11 categories of prohibited content (including “hate,” “sexual” and “self-harm”) and indicates which categories, if any, were triggered by the prompt, as well as a score between 0 and 1 of ChatGPT’s confidence in its assessment of a violation for each category.

In effect, this approach allowed the team to determine why certain script-writing requests were censored, and to deduce the sensitivity of ChatGPT’s content moderation settings to particular topics, genres and age ratings.

As the paper’s authors acknowledge, content moderation is an essential part of LLMs, since removing inappropriate content from the models’ training data is extremely difficult. “If you don’t bake in some form of content moderation,” says Friedler, “then these models will spew violent and racist language at you.”

Still, as the researchers found, overzealous content moderation can easily tip into censorship and limit artistic expression. Aggregating over 250,000 outputs from the content moderation endpoint allowed the researchers to observe patterns in ChatGPT’s choice to permit (or not permit) itself to write certain scripts.

Among the researchers’ most notable findings is that different categories of potentially harmful content flag at different rates. The researchers found that scripts were very frequently flagged for violent content, driving many of the other findings, such as a high likelihood of flagging for crime and horror shows. Real scripts had high relative scores for sexual content, while GPT-generated scripts were less likely to generate content deemed inappropriately sexual in the first place.

In many cases, content seen as appropriate for TV viewers—and watched by millions of fans—was still identified as a content violation by Open AI.

TV scripts that mention self-harm, for instance, could be dangerous, or a form of artistic expression. “We need to be talking about topics like self-harm,” says Metaxa, “but with a level of care and nuance, and it’s just not in the interest of a company producing this kind of tool to put in the enormous amount of effort that it would require to walk that line carefully.”

One aspect of ChatGPT that the researchers hope to explore further is the extent to which the software’s content moderation settings filter out content related to marginalized identities. As Friedler puts it, “This type of filtering may filter out some voices and some representations of human life more than others.”

Indeed, the researchers found that ChatGPT was more likely to flag scripts describing female nudity as improperly sexual than scripts describing male nudity, and that ChatGPT was more likely to rate scripts that included descriptions of disabilities and mental illness as violent, although the researchers say that both trends need to be further investigated.

“Ironically,” says Metaxa, “the groups that are likely to be hurt by hate speech that might spew from an LLM without guardrails are the same groups that are going to be hurt by over-moderation that restricts an LLM from speaking about certain types of marginalized identities.”

In the context of the recent strike, the researchers affirm the necessity of both content moderation and artistic expression, neither of which they believe should be left entirely in the hands of autonomous systems. “Content moderation is far from a solved problem and undeniably important,” the researchers conclude. “But the solution to these issues must not be censorship.”

This study was conducted at the University of Pennsylvania School of Engineering and Applied Science, Haverford College and The Pennsylvania State University.

More information:

Yaaseen Mahomed et al, Auditing GPT’s Content Moderation Guardrails: Can ChatGPT Write Your Favorite TV Show?, The 2024 ACM Conference on Fairness, Accountability, and Transparency (2024). DOI: 10.1145/3630106.3658932

University of Pennsylvania

Censoring creativity: The limits of ChatGPT for scriptwriting (2024, June 12)

retrieved 12 June 2024

from https://techxplore.com/news/2024-06-censoring-creativity-limits-chatgpt-scriptwriting.html

part may be reproduced without the written permission. The content is provided for information purposes only.