Getting robots to perform even a simple task requires a great deal of behind-the-scenes work. Part of the challenge is planning and executing movements, everything from turning wheels to lifting a robotic arm. To make this happen, roboticists collaborate with programmers to develop a set of trajectories—or pathways—that are clear of obstacles and doable for the robot.

Researchers at Carnegie Mellon University’s Robotics Institute (RI) are creating new ways to chart these trajectories.

William Zhi, a postdoctoral fellow in the RI, worked with Ph.D. student Tianyi Zhang and RI Director Matthew Johnson-Roberson to come up with a way to use sketches to show robots how to move. The team will present their work, which is posted on the arXiv preprint server, at the IEEE International Conference on Robotics and Automation in Yokohama, Japan.

“Traditional approaches to generate robot motion trajectories require specific programming of the robot,” said Zhi. “Humans can infer complex instructions through sketches. We seek to empower robots to do the same.”

Recent preliminary work has explored controlling robots with natural language, but researchers have mainly focused on teaching robots through demonstration.

There are two main ways to do this. One is kinesthetic teaching, in which a human physically guides the robot, moving its joints into the desired positions while the motion is recorded. The other is teleoperation, in which the user drives the robot with a specialized remote controller or a joystick and the demonstration is recorded for the robot to copy.

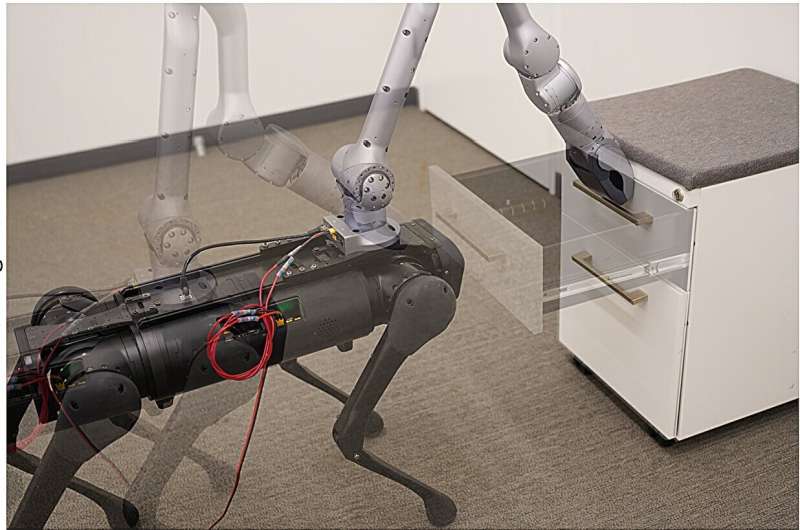

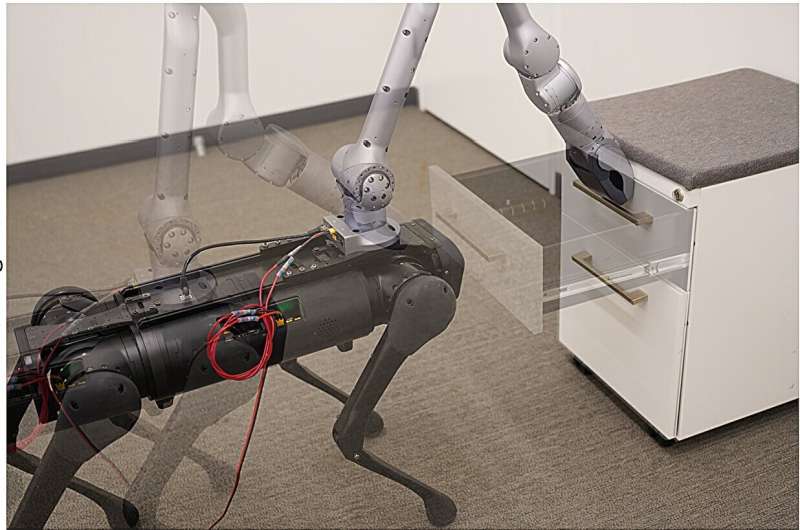

Both methods have their drawbacks. Kinesthetic teaching, specifically, requires the user to be in the same space as the robot. More notably, it’s difficult to manually adjust some robots—and that difficulty only increases with mobile robots, like a quadruped robot with an arm attached to it. Teleoperation requires the user to have precise control and takes time to put a robot through its paces.

The RI team’s approach of sketching trajectories teaches the robot how to move without the drawbacks of kinesthetic teaching or teleoperation. In this novel method, the robot learns from movements drawn on an image of the environment where it will work.

To capture the environment, the team placed cameras at two locations to photograph the scene from different perspectives. They then sketched the robot's desired movement on the images and converted the 2D sketches into 3D trajectories the robot could use. They did this conversion with ray tracing: each sketched point defines a ray running from the camera out into the scene, and combining the rays from the two viewpoints indicates how far from the cameras the trajectory lies.
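
A point sketched in a single image fixes a ray from that camera into the scene but not how far along the ray the point sits; a second view supplies a second ray, and the point lies where the two rays (nearly) cross. The snippet below is a minimal sketch of that geometric core, assuming calibrated cameras whose centres and per-pixel ray directions are already known. The paper works with probability distributions over the sketches rather than single points, so this is not its method, and `triangulate` and its helpers are hypothetical names.

```python
from typing import Tuple

Vec = Tuple[float, float, float]


def sub(a: Vec, b: Vec) -> Vec:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def add(a: Vec, b: Vec) -> Vec:
    return (a[0] + b[0], a[1] + b[1], a[2] + b[2])


def scale(a: Vec, s: float) -> Vec:
    return (a[0] * s, a[1] * s, a[2] * s)


def dot(a: Vec, b: Vec) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def triangulate(c1: Vec, d1: Vec, c2: Vec, d2: Vec) -> Vec:
    """Estimate a 3D point from two camera rays (hypothetical helper).

    Ray i starts at camera centre ci and points along di, the direction
    through the sketched pixel (assumed known from camera calibration).
    Returns the midpoint of the shortest segment joining the two rays.
    """
    w0 = sub(c1, c2)
    a, b, c = dot(d1, d1), dot(d1, d2), dot(d2, d2)
    d, e = dot(d1, w0), dot(d2, w0)
    denom = a * c - b * b
    if abs(denom) < 1e-12:
        # Parallel rays never converge, so depth cannot be recovered.
        raise ValueError("rays are parallel; depth is not recoverable")
    s = (b * e - c * d) / denom  # distance parameter along ray 1
    t = (a * e - b * d) / denom  # distance parameter along ray 2
    p1 = add(c1, scale(d1, s))
    p2 = add(c2, scale(d2, t))
    return scale(add(p1, p2), 0.5)


# Two cameras 2 m apart, both sighting a sketched point at (1, 2, 5).
print(triangulate((0, 0, 0), (1, 2, 5), (2, 0, 0), (-1, 2, 5)))
```

With noisy sketches the rays rarely intersect exactly, which is why the midpoint of their closest approach is used rather than an exact intersection.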

Once the sketches were converted into 3D trajectories, the robot could follow them. For the quadruped equipped with a robotic arm, the researchers sketched three motion trajectories on each photograph to demonstrate how the arm should move; after the ray-tracing step lifted these sketches into 3D, the arm learned to follow the resulting trajectories in the real world.
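
Sketching several trajectories gives the robot a spread of examples rather than a single path, which is what makes a probabilistic model (as in the paper's title) possible. The snippet below is a minimal sketch of one simple way to summarize such demonstrations, assuming each sketch has already been lifted to a list of 3D waypoints and that resampling by waypoint index is an acceptable time alignment. It computes a per-step mean and standard deviation; it is not the paper's model, and `resample` and `summarize` are hypothetical names.

```python
from statistics import mean, pstdev
from typing import List, Tuple

Point = Tuple[float, float, float]


def resample(path: List[Point], n: int) -> List[Point]:
    """Linearly interpolate a polyline (>= 2 points) to n samples spaced evenly by index."""
    out = []
    for k in range(n):
        u = k * (len(path) - 1) / (n - 1)
        i = min(int(u), len(path) - 2)  # segment index, clamped at the last segment
        f = u - i                       # fraction along that segment
        a, b = path[i], path[i + 1]
        out.append(tuple(a[j] + f * (b[j] - a[j]) for j in range(3)))
    return out


def summarize(demos: List[List[Point]], n: int = 20):
    """Per-time-step mean and spread (population std) across demonstrations."""
    aligned = [resample(d, n) for d in demos]
    means, stds = [], []
    for k in range(n):
        pts = [demo[k] for demo in aligned]
        means.append(tuple(mean([p[j] for p in pts]) for j in range(3)))
        stds.append(tuple(pstdev([p[j] for p in pts]) for j in range(3)))
    return means, stds


# Three straight sketches at slightly different heights.
demos = [[(0.0, 0.0, z), (1.0, 0.0, z)] for z in (0.0, 0.1, -0.1)]
means, stds = summarize(demos, n=5)
```

A wide spread at some step signals that the demonstrations disagree there, so a controller could track the mean loosely at that point and tightly where the sketches agree.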

Using this technique, the team has trained its quadruped robot to close drawers, sketch the letter “B,” tip over a box and more. They have also programmed the robot to open its gripper at the end of certain trajectories, which allows it to drop objects into boxes or cups. Moreover, they can generalize the movements that they teach the robot across many separate tasks.

“We’re able to teach the robot to do something and then switch it to a different starting position, and it can take the same action,” said Zhi. “We can get quite precise results.”
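
The article does not say how the learned motion is adapted to a new starting position. The snippet below is a minimal sketch of one simple heuristic, assuming the demonstration is a list of 3D waypoints: the offset between the new start and the demonstrated start is applied in full at the first waypoint and faded linearly to zero, so the motion still ends where the demonstration ended. It is a swapped-in illustration, not the paper's technique, and `retarget_start` is a hypothetical name.

```python
from typing import List, Tuple

Point = Tuple[float, float, float]


def retarget_start(demo: List[Point], new_start: Point) -> List[Point]:
    """Shift a demonstrated path so it begins at new_start (hypothetical helper).

    The start offset is blended out linearly along the path, so the final
    waypoint (the goal of the action) is unchanged.
    """
    n = len(demo)
    if n < 2:
        raise ValueError("need at least two waypoints")
    offset = tuple(new_start[j] - demo[0][j] for j in range(3))
    out = []
    for k, p in enumerate(demo):
        w = 1.0 - k / (n - 1)  # weight: 1 at the first waypoint, 0 at the last
        out.append(tuple(p[j] + w * offset[j] for j in range(3)))
    return out


demo = [(0.0, 0.0, 0.0), (0.5, 0.0, 0.0), (1.0, 0.0, 0.0)]
print(retarget_start(demo, (0.0, 0.2, 0.0)))
```

This blending does not check for obstacles, so a real system would still need to verify that the shifted path is collision-free and reachable.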

Currently, the method works only on robots with rigid joints, not soft robots, because it relies on knowing the joint angles and how they map to points in space. Even with rigid hardware, challenges remain. During the experiments, the quadruped sometimes lost its balance after executing a motion such as extending its arm to close a drawer, an issue the team is addressing in the next iteration of the system.
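
For a rigid arm, the mapping from joint angles to a point in space is forward kinematics, and following a sketched path in space requires the reverse mapping, inverse kinematics. The snippet below is a minimal sketch for the simplest case, a planar two-link arm with hypothetical link lengths; the quadruped's arm has more joints and moves in 3D, so this only illustrates the idea.

```python
import math
from typing import Tuple


def forward_kinematics(theta1: float, theta2: float, l1: float, l2: float) -> Tuple[float, float]:
    """End-effector (x, y) of a planar two-link arm with rigid links of length l1, l2."""
    x = l1 * math.cos(theta1) + l2 * math.cos(theta1 + theta2)
    y = l1 * math.sin(theta1) + l2 * math.sin(theta1 + theta2)
    return x, y


def inverse_kinematics(x: float, y: float, l1: float, l2: float) -> Tuple[float, float]:
    """Joint angles placing the end effector at (x, y); returns the solution with theta2 >= 0."""
    # Law of cosines gives the elbow angle from the distance to the target.
    cos_t2 = (x * x + y * y - l1 * l1 - l2 * l2) / (2 * l1 * l2)
    if not -1.0 <= cos_t2 <= 1.0:
        raise ValueError("target is out of reach")
    theta2 = math.acos(cos_t2)  # acos returns a value in [0, pi]
    theta1 = math.atan2(y, x) - math.atan2(l2 * math.sin(theta2), l1 + l2 * math.cos(theta2))
    return theta1, theta2


# Round trip: joint angles -> point -> joint angles (link lengths are illustrative).
x, y = forward_kinematics(0.3, 0.9, 1.0, 0.8)
print(inverse_kinematics(x, y, 1.0, 0.8))
```

A soft robot has no small, fixed set of joint angles that pin down its shape, which is why a mapping like this does not carry over to it directly.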

“People in the field have focused more on the algorithm around generating better motions from the demonstration. This research is the inception of us using trajectory sketches to instruct robots,” said Zhi. “We envision in manufacturing settings, where you have someone unskilled at programming robots, enabling them to just sketch on an iPad and then do collaborative things with the robot is where this work is likely to go in the future.”

More information:

Weiming Zhi et al., Instructing Robots by Sketching: Learning from Demonstration via Probabilistic Diagrammatic Teaching, arXiv (2023). DOI: 10.48550/arxiv.2309.03835

Carnegie Mellon University

Teaching robots to move by sketching trajectories (2024, May 8), retrieved 8 May 2024 from https://techxplore.com/news/2024-05-robots-trajectories.html
