Motor impairments currently affect about 5 million people in the United States. Physically assistive robots have the potential not only to help these individuals with daily tasks but also to significantly increase their independence, well-being and quality of life.

Large language models (LLMs) that can both comprehend and generate human language and code have been crucial to effective human-robot communication. A group of researchers at the Carnegie Mellon University Robotics Institute recognized the importance of LLMs and determined that further developing innovative interfaces will enhance communication between individuals and assistive robots, leading to improved care for those affected by motor impairments.

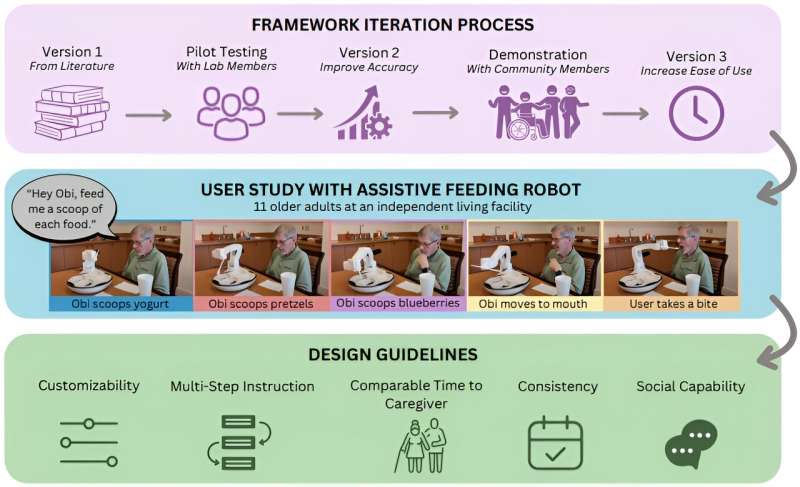

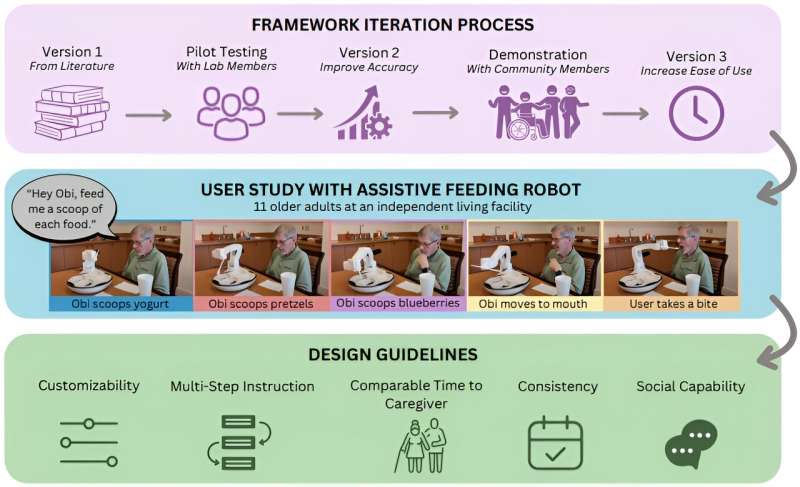

The research group, comprising faculty and students from the Robotic Caregiving and Human Interaction lab (RCHI), the Human And Robot Partners lab (HARP), and the Soft Machines Lab (SML), proposed VoicePilot, a framework and design guidelines for incorporating LLMs as speech interfaces for physically assistive robots.

As experts in human-robot interaction, the team ensured their approach was human-centric, making VoicePilot the first work to involve human subjects interacting directly with the LLM integrated into a physically assistive robot.

The VoicePilot paper was accepted for publication at the Symposium on User Interface Software and Technology (UIST 2024), which will be held in Pittsburgh in October. It is available on the arXiv preprint server.

“We believe that LLMs are the key to developing personalizable and robust speech interfaces for assistive robots that can provide robots with the ability to interpret high-level commands and nuanced customizations,” said Jessie Yuan, co-author and undergraduate student in the Robotic Caregiving and Human Interaction Lab.

The group implemented their LLM-based speech interface on Obi, a commercially available assistive feeding robot. The goal was for users to give Obi personalized instructions, much as they would to a human caregiver, and have those instructions carried out successfully.

To test the effectiveness of VoicePilot, the team conducted a human study with 11 older adults residing in an independent living facility. Using predefined tasks, an open feeding session, and an analysis of audio recordings collected during the study, they gathered data to provide design guidelines for the incorporation of LLMs in assistive interfaces.

The team used the collected data to determine five main guidelines for integrating LLMs as speech interfaces: The integration should offer customization options, execute multiple functions sequentially, execute commands at speeds comparable to a caregiver, execute commands consistently, and be able to engage socially with the user.
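To make the guidelines on customization and sequential execution more concrete, here is a minimal Python sketch. It is not the VoicePilot implementation or Obi's API: the `FeedingRobotStub` class, its methods, the `run_plan` helper, and the plan format are all hypothetical, and the plan stands in for what an LLM might produce from a spoken request such as "feed me from bowl 2, a bit slower."

```python
from dataclasses import dataclass, field


@dataclass
class FeedingRobotStub:
    """Hypothetical stand-in for a feeding robot; it logs actions instead of moving."""
    speed: float = 1.0
    log: list[str] = field(default_factory=list)

    def set_speed(self, value: float) -> None:
        # Customization: clamp the requested speed to an assumed safe range.
        self.speed = max(0.1, min(value, 2.0))
        self.log.append(f"speed={self.speed}")

    def scoop(self, bowl: int) -> None:
        self.log.append(f"scoop bowl {bowl}")

    def move_to_mouth(self) -> None:
        self.log.append("move to mouth")


def run_plan(robot: FeedingRobotStub, plan: list[tuple[str, list]]) -> list[str]:
    """Run (function name, arguments) steps in order, allowing only known functions."""
    allowed = {
        "set_speed": robot.set_speed,
        "scoop": robot.scoop,
        "move_to_mouth": robot.move_to_mouth,
    }
    # Check the whole plan first so a bad step never leaves it half executed.
    for name, _ in plan:
        if name not in allowed:
            raise ValueError(f"unknown robot function: {name}")
    # Sequential execution: one spoken request can map to several robot functions.
    for name, args in plan:
        allowed[name](*args)
    return robot.log


# Assumed LLM output for "feed me from bowl 2, a bit slower".
plan = [("set_speed", [0.5]), ("scoop", [2]), ("move_to_mouth", [])]
print(run_plan(FeedingRobotStub(), plan))
# ['speed=0.5', 'scoop bowl 2', 'move to mouth']
```

Restricting the plan to a fixed set of named functions is one simple way to keep behavior consistent from one command to the next. A real system would also need speech recognition, the LLM call itself, and safety checks, none of which are shown here.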

“Our proposed framework and guidelines will aid researchers, engineers, and designers both in academia and industry with developing LLM-based speech interfaces for assistive robots,” said Akhil Padmanabha, co-author and Ph.D. student at the Robotics Institute.

More information:

Akhil Padmanabha et al, VoicePilot: Harnessing LLMs as Speech Interfaces for Physically Assistive Robots, arXiv (2024). DOI: 10.48550/arxiv.2404.04066

Carnegie Mellon University

VoicePilot framework enhances communication between humans and physically assistive robots (2024, August 29), retrieved 29 August 2024 from https://techxplore.com/news/2024-08-voicepilot-framework-communication-humans-physically.html

